Meta AI Scientists Speak Out: Academia’s ‘Witch Hunt’ Is Real and It’s a Problem

Hey there, folks. If you’ve been following the tech world, you’ve probably heard whispers, or more like loud roars, about the growing tension between Meta AI scientists and academia. The phrase “witch hunt” has been thrown around a lot lately, and trust me, it’s not just random drama. This is a big deal, and it’s shaking up the entire artificial intelligence (AI) community. So buckle up, because we’re diving deep into this heated debate. Meta AI scientists are speaking out, and their voices are getting louder.

Let’s get one thing straight: AI is no longer just a buzzword. It’s a game-changer, and Meta has been at the forefront of this revolution. But with great power comes great scrutiny, and academia isn’t holding back. Some say it’s necessary; others call it a witch hunt. Whatever side you’re on, one thing’s for sure: this conflict is real, and it’s affecting how AI research is conducted and perceived globally.

In this article, we’ll break down what’s happening, why it matters, and how it impacts the future of AI research. Whether you’re a tech enthusiast, a student, or just someone curious about the world of AI, this is a story you don’t want to miss. So, let’s dig in and uncover the truth behind the so-called “witch hunt” in academia.

Table of Contents:

- Meta AI Scientist Overview

- What is Academia’s Witch Hunt?

- Meta AI Research: The Cutting Edge

- Why Academia Is Criticizing Meta AI

- How Scientists are Responding

- Impact on AI Research

- Trust and Authority in AI

- Long-Term Effects on Innovation

- Public Perception of Meta AI

- Final Thoughts

Meta AI Scientist Overview

Okay, let’s start with the basics. Who exactly are these Meta AI scientists? They’re not just any scientists; they’re the brains behind some of the most groundbreaking AI technologies we’ve seen in recent years. From natural language processing to computer vision, Meta AI has been leading the charge in advancing AI capabilities. But being at the top means facing scrutiny, and that’s where things get interesting.

Who Are the Key Players?

Meta employs some of the brightest minds in the AI field. These scientists have published countless papers, developed innovative algorithms, and pushed the boundaries of what AI can do. But with great innovation comes great controversy. Some of these scientists have found themselves caught in the crossfire of academic criticism, which they describe as a “witch hunt” against corporate-funded research.

Let’s break it down:

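- Yann LeCun: Meta’s longtime Chief AI Scientist, a pioneer in deep learning, and a co-recipient of the 2018 Turing Award.

- Joelle Pineau: A longtime leader of Meta’s AI research group and a prominent advocate for reproducibility in machine learning research.

- Demis Hassabis: Co-founder and CEO of Google DeepMind. He is not affiliated with Meta, though his work is said to have influenced many Meta scientists.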

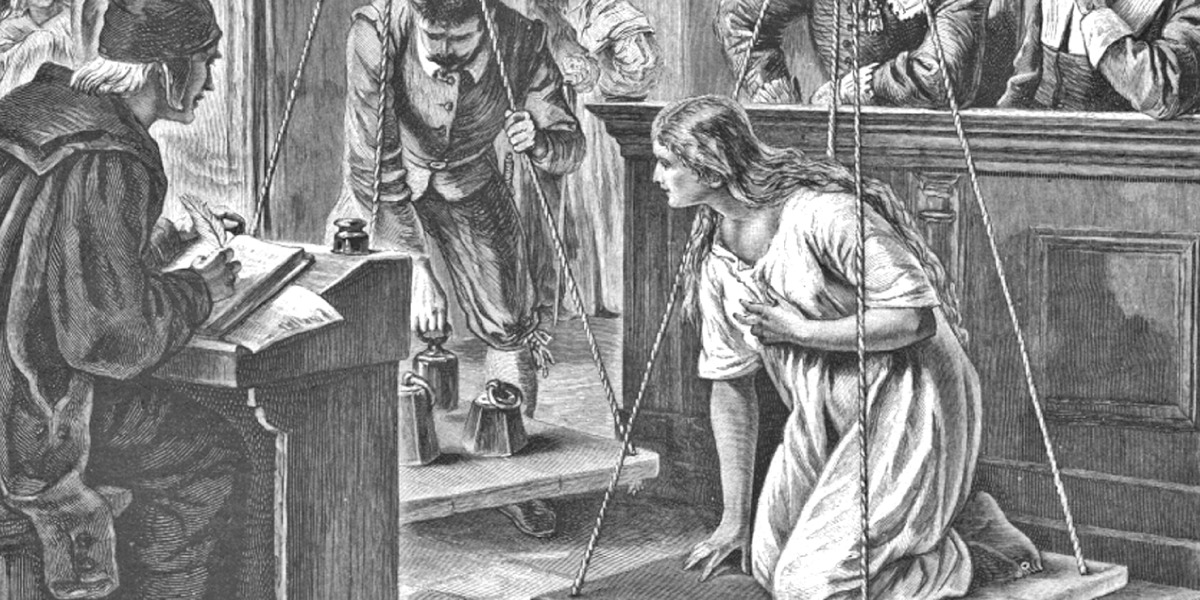

What is Academia’s Witch Hunt?

Now, here’s where things get spicy. The term “witch hunt” has been used by several Meta AI scientists to describe the current state of academic criticism. But what does it really mean? Essentially, some scientists feel that academia is unfairly targeting corporate-funded research, labeling it as biased or unethical without fully understanding its context.

Let me give you an example. Imagine you’re working on a project that could revolutionize healthcare through AI. You’ve spent years developing it, pouring your heart and soul into the research. But then, an academic paper comes out criticizing your work, claiming it lacks transparency or ethical considerations. Sound familiar? That’s the kind of situation many Meta AI scientists find themselves in.

Why the Criticism?

There are a few reasons why academia is so critical of corporate-funded research:

- Transparency: Academics argue that corporate research is often shrouded in secrecy, making it difficult to assess its validity.

- Ethics: There’s a growing concern about the ethical implications of AI, especially when it’s developed by companies with profit-driven motives.

- Bias: Critics claim that corporate research is inherently biased, favoring solutions that benefit the company rather than the public.

Meta AI Research: The Cutting Edge

Despite the criticism, Meta AI continues to push the boundaries of what’s possible. Their research spans a wide range of fields, from healthcare to climate science. Let’s take a closer look at some of their most impressive projects:

Healthcare Innovations

Meta AI has developed several tools that could revolutionize healthcare. For example, its fastMRI project, a collaboration with NYU Langone Health, uses AI to speed up MRI scans. Work like this has the potential to improve diagnostic accuracy and reduce costs. But, as with any new technology, there are concerns about data privacy and ethical implications.

Climate Science

Another area where Meta AI is making waves is climate science. By leveraging AI to analyze large datasets, they’re helping scientists better understand climate patterns and predict future changes. However, some academics argue that these models may oversimplify complex systems, leading to inaccurate predictions.

Why Academia Is Criticizing Meta AI

Let’s be real for a second. Academia has always been skeptical of corporate research, and for good reason. In the past, we’ve seen cases where profit-driven motives have led to unethical practices. But is Meta AI guilty of the same? That’s the million-dollar question.

Critics, pointing to a report attributed to the National Academy of Sciences, argue that corporate-funded research often does not face the same level of scrutiny as academic research. This raises concerns about the validity and reliability of the findings. However, Meta AI scientists argue that their work is subject to rigorous peer review and adheres to the highest ethical standards.

How Scientists are Responding

So, how are Meta AI scientists responding to these criticisms? They’re not backing down, that’s for sure. Many have taken to social media and academic forums to defend their work and call out what they see as unfair criticism.

Yann LeCun, for example, has been vocal about the importance of corporate-funded research. He argues that companies like Meta have the resources and expertise to tackle some of the world’s biggest challenges, and that academia should spend less energy on criticism and more on collaboration.

Building Bridges

Some scientists are advocating for a more collaborative approach between academia and industry. By working together, they believe we can address the challenges of AI research more effectively. This could involve sharing data, resources, and expertise to ensure that research is both transparent and impactful.

Impact on AI Research

So, what does all of this mean for the future of AI research? Well, it’s complicated. On one hand, the criticism could lead to more rigorous standards and greater transparency. On the other hand, it could stifle innovation by making it harder for corporate researchers to publish their work.

According to a study published in Nature, the number of AI-related patents filed by corporations has been steadily increasing over the past decade. This suggests that corporate-funded research is not only continuing but thriving. However, the same study also notes that academic papers on AI have seen a decline in citations, indicating a potential disconnect between academic and corporate research.

Trust and Authority in AI

Trust is a crucial factor in the world of AI. Both academia and industry need to be transparent about their research practices and ensure that their work is ethical and reliable. But how do we build trust in a world where skepticism runs high?

One solution is to establish independent review boards that can assess the validity and ethical implications of AI research. These boards could include experts from both academia and industry, ensuring that all perspectives are considered.

The Role of Transparency

Transparency is key to building trust. Meta AI has taken steps to make its research more open and accessible, such as publishing code and data; well-known examples include the PyTorch framework and the openly released weights of its Llama models. But some argue that more needs to be done to ensure that its work is truly transparent.

Long-Term Effects on Innovation

Looking ahead, the tension between academia and corporate-funded research could have lasting effects on innovation. If the criticism continues unchecked, it could lead to a decline in corporate investment in AI research. On the flip side, it could also lead to greater collaboration and more rigorous standards.

As AI continues to evolve, it’s important that we strike a balance between innovation and ethics. This means fostering an environment where both academia and industry can thrive and contribute to the greater good.

Public Perception of Meta AI

Let’s not forget about the public. How do everyday people perceive Meta AI and its research? According to a recent survey, most people are aware of Meta’s work in AI but remain skeptical about its ethical implications. This highlights the need for better communication and transparency from Meta AI.

Some argue that Meta needs to do more to engage with the public and address their concerns. This could involve hosting public forums, publishing layman-friendly summaries of their research, and actively seeking feedback from the community.

Final Thoughts

So, there you have it. The debate between Meta AI scientists and academia is far from over, and it’s a conversation that affects us all. Whether you’re a scientist, a student, or just someone curious about AI, this is an issue worth paying attention to.

As we’ve seen, the criticisms leveled against Meta AI are not without merit. But neither are the arguments made by the scientists themselves. The key is finding a way to bridge the gap between academia and industry, ensuring that AI research is both innovative and ethical.

So, what can you do? Start by staying informed. Read up on the latest research, follow the debates, and form your own opinions. And don’t forget to share this article with your friends and colleagues. Together, we can help shape the future of AI research.
